In machine learning we often hear the term Natural Language Processing. It is a very broad field that tackles a variety of problems related to working with text. But how do we process a natural language using a computer, which understands nothing but numbers? To do this, we need to somehow transform what is written with words into something we can use in our computations.

There are, as usual in machine learning, a lot of ways to achieve this, but they all share a common concept: word embeddings. The idea is to embed our vocabulary in a vector space. By this, we mean assigning a vector of numbers to each word in a dictionary. Ideally, we would like to do it in a way that assigns similar vectors to words that are closely related in a natural language. This would enable, for example, finding similar words automatically just by computing distances in a vector space, something a computer can do easily and quickly.
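To make "computing distances in a vector space" concrete, here is a minimal sketch. The three-dimensional vectors are hand-picked for illustration and do not come from any trained model, and cosine similarity is only one common choice of closeness measure; the helper names are our own.

```python
import math

# Hypothetical embeddings, hand-picked for illustration only.
embeddings = {
    "guitar": [0.9, 0.8, 0.1],
    "piano": [0.8, 0.9, 0.2],
    "banana": [0.1, 0.2, 0.9],
}

def cosine_similarity(u, v):
    """Cosine of the angle between u and v; 1.0 means the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def most_similar(word, table):
    """Return the other word in `table` whose vector is closest to `word`."""
    others = [w for w in table if w != word]
    return max(others, key=lambda w: cosine_similarity(table[word], table[w]))

print(most_similar("guitar", embeddings))  # piano
```

With real embeddings the table would hold many thousands of words, but the lookup would follow the same pattern.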

Nice Idea, But…

…how can we actually find those vectors? This is certainly too much work to do manually. Fortunately, computers can not only analyze text already transformed to numerical form but also perform this transformation! Computing word embeddings is itself a machine learning task. We can ask a machine: which numerical representation of this text would be the most convenient for you to work with? All we need to think about is how to formulate this question.

What Is Word Vectorization

- Computers can help us in working with text data…

- …but they understand only numbers.

- Let’s transform words into numbers!

- Idea: words used in similar contexts should have similar numerical representations.

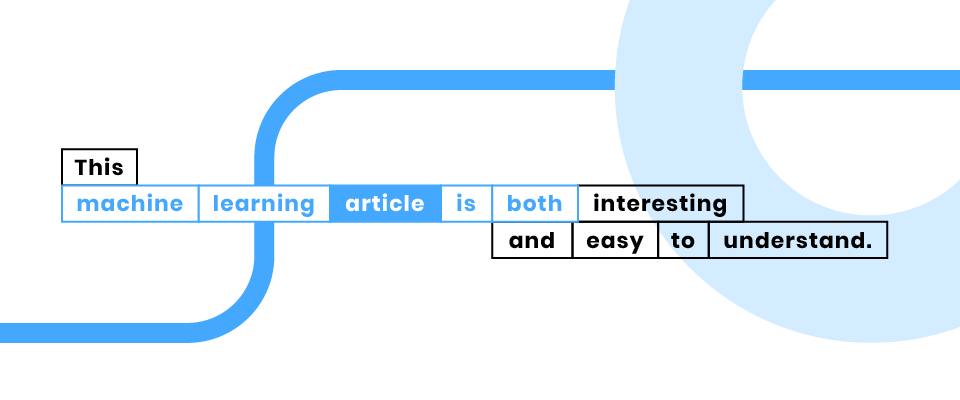

In 2013, scientists at Google published word2vec, an approach that became an important milestone in NLP. The first thing we need, as in every ML task, is data. In our case, this means a lot of text. We split the text into 5-word chunks, and by looking at them we can see how words occur near each other in the natural language.
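The chunking step could be sketched as follows. We assume here that the chunks overlap, with the window sliding one word at a time, and that lowercasing and splitting on whitespace is good enough as tokenization. The function name and the example sentence are made up for illustration.

```python
def five_word_chunks(text, size=5):
    """Slide a window of `size` words over the text, one word at a time."""
    words = text.lower().split()
    return [words[i:i + size] for i in range(len(words) - size + 1)]

sentence = "An article about machine learning algorithms is both short and useful"
chunks = five_word_chunks(sentence)

print(len(chunks))  # 7
print(chunks[3])    # ['machine', 'learning', 'algorithms', 'is', 'both']
```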

Once we have a dataset, we need a loss function, that is, a way to tell the machine how well it is learning. In word2vec, we ask the computer to fill in the gaps. The machine is presented with “machine learning ____ is both” and we expect it to correctly guess which word is missing.
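Turning a chunk into such a fill-in-the-gap example takes only a few lines. This sketch hides the middle word and keeps the rest as context, which corresponds to the CBOW variant of word2vec; the function name `make_cloze` is our own.

```python
def make_cloze(chunk):
    """Hide the middle word of a chunk: return (context words, hidden word)."""
    middle = len(chunk) // 2
    context = chunk[:middle] + chunk[middle + 1:]
    return context, chunk[middle]

context, target = make_cloze(["machine", "learning", "article", "is", "both"])
print(context)  # ['machine', 'learning', 'is', 'both']
print(target)   # article
```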

Side note: while we would like the machine to guess the correct word every time, in practice this is impossible. In the example above we could fill the gap with “article”, but also “job”, “algorithm”, and many other words. The best a computer can do is to give us a probability for each word in the dictionary. This tells us which words are the most likely to be the right answer.
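One common way to turn a model's raw scores into such probabilities is the softmax function. The scores below are invented for illustration, and practical word2vec implementations typically use cheaper approximations of softmax, so treat this as a sketch of the idea only.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtracted for numerical stability
    exps = {w: math.exp(s - highest) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

# Hypothetical scores a model might assign to candidates for the gap.
scores = {"article": 2.0, "job": 1.5, "algorithm": 1.8, "banana": -3.0}
probabilities = softmax(scores)
best_guess = max(probabilities, key=probabilities.get)

print(best_guess)  # article
```

Note that "job" and "algorithm" still receive a substantial share of the probability, which matches the intuition that several words can fill the gap.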

All that is left is to express this question using a mathematical formula and optimize its parameters. That goes beyond the scope of this short text, but one question still calls for an answer. The whole procedure was supposed to give us the word embeddings, so where are they?

Understanding Words as Vectors

The trick is as follows. In the beginning, we tell the machine to understand words as vectors. We do it by assigning each word a random vector. Those vectors then become parameters of our loss function. When the parameters slowly change during the optimization, the word vectors change too. Experiments show that after the learning process ends, they are useful numerical representations of the words in the dictionary.
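For the curious, the whole trick fits into a short toy program. This is a simplified CBOW-style sketch with a full softmax and plain stochastic gradient descent, not the original word2vec implementation. The hyperparameters, the function name `train_cbow`, and the four training chunks are all assumptions made for illustration.

```python
import math
import random

def train_cbow(chunks, dim=8, epochs=300, lr=0.2, seed=0):
    """Learn word vectors by guessing the middle word of each chunk."""
    rng = random.Random(seed)
    vocab = sorted({w for chunk in chunks for w in chunk})
    # Every word starts with a random vector; `out` holds the scoring weights.
    emb = {w: [rng.uniform(-0.5, 0.5) for _ in range(dim)] for w in vocab}
    out = {w: [rng.uniform(-0.5, 0.5) for _ in range(dim)] for w in vocab}
    losses = []
    for _ in range(epochs):
        total = 0.0
        for chunk in chunks:
            mid = len(chunk) // 2
            target, context = chunk[mid], chunk[:mid] + chunk[mid + 1:]
            # Average the context vectors into one hidden vector.
            hidden = [sum(emb[c][k] for c in context) / len(context)
                      for k in range(dim)]
            # Score every word in the vocabulary and apply softmax.
            scores = {w: sum(o * h for o, h in zip(out[w], hidden)) for w in vocab}
            top = max(scores.values())
            exps = {w: math.exp(s - top) for w, s in scores.items()}
            norm = sum(exps.values())
            probs = {w: e / norm for w, e in exps.items()}
            total += -math.log(probs[target])  # cross-entropy loss
            # Gradient of the loss with respect to each score: prob - one_hot.
            grad_hidden = [0.0] * dim
            for w in vocab:
                err = probs[w] - (1.0 if w == target else 0.0)
                for k in range(dim):
                    grad_hidden[k] += err * out[w][k]  # uses the old weight
                    out[w][k] -= lr * err * hidden[k]
            # The word vectors are parameters too, so they get updated as well.
            for c in context:
                for k in range(dim):
                    emb[c][k] -= lr * grad_hidden[k] / len(context)
        losses.append(total / len(chunks))
    return emb, losses

chunks = [
    ["machine", "learning", "article", "is", "both"],
    ["machine", "learning", "algorithm", "is", "both"],
    ["the", "guitar", "player", "is", "loud"],
    ["the", "piano", "player", "is", "loud"],
]
vectors, losses = train_cbow(chunks)
print(losses[0] > losses[-1])  # True
```

The average loss drops as the vectors move away from their random starting points. Four chunks are far too few to learn anything meaningful, so useful embeddings require a large text corpus.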

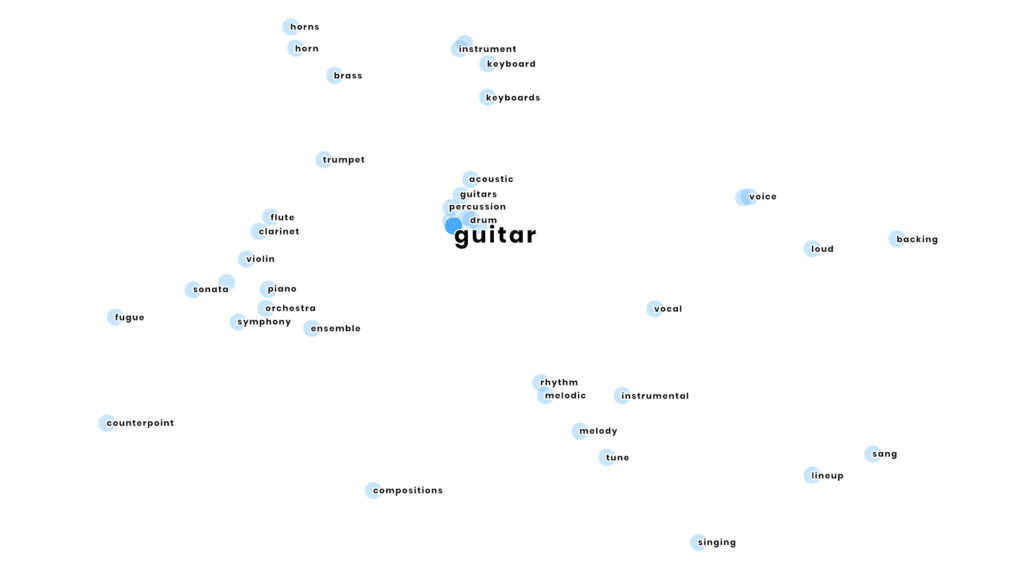

Now that we can represent words in numerical form, we can perform all sorts of computational tasks. This lets us tackle problems like phrase and document clustering, finding similarities between words or entire texts, generating sentences in natural language, sentiment analysis, and much more. We can also create cool visualizations, such as one showing the nearest neighbors of the word “guitar” measured in a vector space. The result is what we would expect: things and phrases that go together in real life are placed close to each other.
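As a small illustration of comparing entire texts, one simple baseline is to average the vectors of the words in each text and compare the averages. The two-dimensional vectors below are hand-picked rather than trained, and averaging is only one of many possible ways to embed a document.

```python
import math

# Hypothetical word vectors, hand-picked for illustration only.
word_vectors = {
    "guitar": [0.9, 0.1], "piano": [0.8, 0.2], "concert": [0.7, 0.3],
    "stock": [0.1, 0.9], "market": [0.2, 0.8], "price": [0.1, 0.8],
}

def embed_text(text, vectors):
    """Average the vectors of the known words in `text`."""
    known = [vectors[w] for w in text.lower().split() if w in vectors]
    if not known:
        raise ValueError("text contains no known words")
    dim = len(known[0])
    return [sum(v[k] for v in known) / len(known) for k in range(dim)]

def cosine_similarity(u, v):
    """Cosine of the angle between u and v; 1.0 means the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

music_a = embed_text("guitar concert", word_vectors)
music_b = embed_text("piano concert", word_vectors)
finance = embed_text("stock market price", word_vectors)

print(cosine_similarity(music_a, music_b) > cosine_similarity(music_a, finance))  # True
```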

What Else Is There?

Word2vec is simple and fast, which makes it still useful today. However, there are many more modern and much more complex ways to compute word embeddings. Recent years have brought us transformer architectures, like BERT and GPT, which popularized transfer learning in NLP. Their creators combine attention mechanisms with the huge computational resources available today to make machines understand natural language better than ever before.


At Stermedia, we make use of both simple approaches like word2vec and more sophisticated ones like BERT, since each has its pros and cons. Fortunately, we don’t have to implement everything ourselves, as there are a lot of great libraries to play with. Most notably, we’ll mention gensim, NLTK, spaCy, flair, and 🤗 Transformers (yes, the hugging face is a part of the official name).

- gensim: https://radimrehurek.com/gensim/

- NLTK: https://www.nltk.org/

- spaCy: https://spacy.io/

- flair: https://github.com/flairNLP/flair

- 🤗 Transformers: https://github.com/huggingface/transformers

How It Works – In Brief

- Take a lot of text, split it into 5-word chunks.

- Start with assigning a random vector to each word in a dictionary.

- Ask the machine to solve many cloze (fill-in-the-gap) tests. With each example, it learns something about the language. In scientific terms, this is called optimizing a loss function.

- During optimization, the machine learns how to represent words as vectors.

- This is word2vec – a simple and useful algorithm for word vectorization.

What Can We Use It For

- Clustering

- Finding similar words or documents

- Extracting information from text

- Generating text automatically

- Creating visualizations

- Everything else that requires performing computations on words

About the Author

Maciej Pawlikowski – Data Scientist. He holds a degree in both math and computer science. Interested mostly in natural language processing. Maciej has also worked successfully in time series forecasting. Big enthusiast of optimization and code style. Always on the hunt for more efficient and convenient tools for data science and coding.