We met again at the weekly Lightning Talks series, a grassroots initiative in which colleagues from our development teams share knowledge in short online meetings.

This time, on February 1, we learned more about managing projects with MLflow, and on February 8 we heard a talk on ZeRO-Offload, a technique that enables training models with billions of parameters on a single GPU.

Managing projects with MLflow

The third meeting in the series was led by a talented data scientist, Michał Krasoń, who has tested his skills at international competitions such as Kaggle Days China 2019.

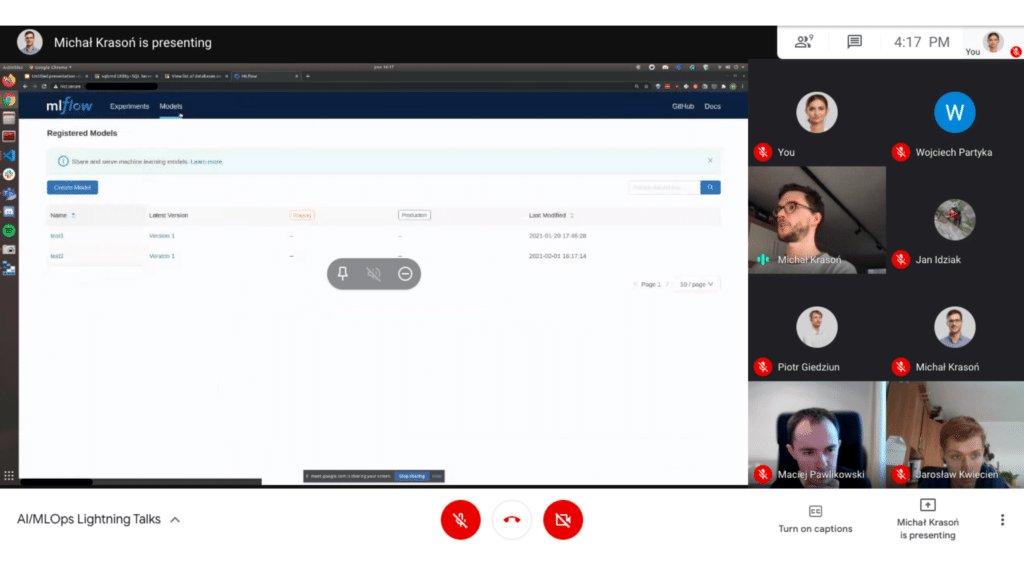

The topic of his webinar was MLflow, an open-source platform that helps organize machine learning experiments. “It provides us with a suite of tools to manage project dependencies, metrics and models storage as well as deployment,” says Michał.

We took a quick tour of its main components (Projects, Tracking, Models and the Model Registry) and then looked more closely at specific use cases in a demo setup of an MLflow server.

Training huge models with ZeRO-Offload

The fourth meeting was led by Radosław Lemiec, another gifted data scientist from Stermedia. Radosław told us about ZeRO-Offload, a PyTorch-oriented technique that enables training models with billions of parameters on a single GPU. By offloading part of the data and computation (gradients, optimizer states and the optimizer step) to the CPU, it reduces GPU memory consumption while maintaining stable throughput.
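A back-of-the-envelope calculation shows why this helps. The sketch below uses the per-parameter accounting from the ZeRO papers for mixed-precision Adam training (2 bytes of fp16 parameters, 2 bytes of fp16 gradients and 12 bytes of fp32 optimizer state) and the ZeRO-Offload split in which only the fp16 parameters stay on the GPU. It deliberately ignores activations, temporary buffers and memory fragmentation, so real usage will be higher; the 10-billion-parameter model size is an arbitrary example.

```python
# Bytes per parameter for mixed-precision training with Adam, following the
# accounting used in the ZeRO / ZeRO-Offload papers.
FP16_PARAM_BYTES = 2
FP16_GRAD_BYTES = 2
FP32_OPTIMIZER_BYTES = 12  # fp32 master weights + Adam momentum + Adam variance (4 + 4 + 4)

GB = 1e9  # decimal gigabytes


def model_state_memory_gb(num_params: float) -> dict[str, float]:
    """Estimate model-state memory in GB, ignoring activations and buffers."""
    per_param = FP16_PARAM_BYTES + FP16_GRAD_BYTES + FP32_OPTIMIZER_BYTES
    total = num_params * per_param
    # ZeRO-Offload keeps only the fp16 parameters on the GPU; fp16 gradients
    # and all fp32 optimizer states live in CPU memory, where the Adam step runs.
    gpu = num_params * FP16_PARAM_BYTES
    return {
        "baseline_gpu_gb": total / GB,         # all model states on one GPU
        "offload_gpu_gb": gpu / GB,            # GPU side under ZeRO-Offload
        "offload_cpu_gb": (total - gpu) / GB,  # CPU side under ZeRO-Offload
    }


print(model_state_memory_gb(10e9))
# {'baseline_gpu_gb': 160.0, 'offload_gpu_gb': 20.0, 'offload_cpu_gb': 140.0}
```

Under these assumptions, the model states of a 10-billion-parameter model shrink from roughly 160 GB to roughly 20 GB on the GPU, with the remaining 140 GB held in CPU memory.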

ZeRO-Offload also scales nearly linearly in throughput as the number of GPUs grows. “Altogether it aims to solve one of the biggest blockers in deep machine learning,” says Radosław, “a requirement for powerful and expensive hardware.”

Both meetings lasted 45 minutes: 15 minutes for the presentation and the remaining 30 for discussion. This short format keeps attention high while leaving plenty of time for questions and clearing up doubts, and it also attracts many participants. Thanks to the clarity of the presentations, even people from outside AI/ML had the chance to follow along and learn new skills.

Stay tuned!
