Artificial Intelligence is revolutionizing industries by driving automation, efficiency, and precision. However, behind every successful implementation lies not just a brilliant algorithm but, above all, quality. And not just as a final check, but as a QA process that accompanies the AI project from day one. By some expert estimates, Quality Assurance accounts for up to 75% of the work in AI projects.

What is Quality Assurance in the Context of AI?

In traditional software development, QA ensures that functionality aligns with requirements: code is supposed to behave the same way every time. In AI, we deal with statistical and often non-deterministic models whose behavior may vary even with identical inputs. This makes classical QA approaches insufficient on their own.

In AI, QA includes:

- Verifying input data (quality, completeness, class balance),

- Monitoring the training and validation process of the model,

- Evaluating outcomes using appropriate statistical metrics,

- Detecting bias and flawed model assumptions,

- Ongoing production monitoring.

Three Pillars of Quality Assurance in AI

Pre-training Phase – Data is Key

This is the most crucial and time-consuming stage. AI models learn from the data we feed them. If data is incomplete, noisy, imbalanced, or biased, the model will learn exactly that.

For example, recruitment AI systems have discriminated against women because they were trained on historical data dominated by male applicants.
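The data checks described in this phase can be sketched in a few lines. The example below is a minimal illustration, not a full data-validation pipeline: the function name `data_quality_report`, the field names, and the toy records are all invented for this sketch. It counts incomplete rows and measures how unevenly the labels are distributed.

```python
from collections import Counter

def data_quality_report(rows, label_key, required_keys):
    """Summarize completeness and class balance for a list of dict records."""
    # A row counts as incomplete if any required field is missing, None, or empty.
    incomplete = sum(
        1 for row in rows
        if any(row.get(key) in (None, "") for key in required_keys)
    )
    label_counts = Counter(
        row[label_key] for row in rows if row.get(label_key) is not None
    )
    largest = max(label_counts.values()) if label_counts else 0
    smallest = min(label_counts.values()) if label_counts else 0
    return {
        "rows": len(rows),
        "incomplete_rows": incomplete,
        "label_counts": dict(label_counts),
        # Largest class divided by smallest class; 1.0 means perfectly balanced.
        "imbalance_ratio": (largest / smallest) if smallest else float("inf"),
    }

# Hypothetical toy records, invented purely for illustration.
rows = [
    {"years_experience": 5, "gender": "M", "hired": 1},
    {"years_experience": 3, "gender": "M", "hired": 1},
    {"years_experience": 4, "gender": "F", "hired": 0},
    {"years_experience": None, "gender": "M", "hired": 1},
]
report = data_quality_report(
    rows, label_key="hired", required_keys=["years_experience", "gender"]
)
print(report)
```

A report like this does not prove that the data is unbiased, but a high imbalance ratio or many incomplete rows is an early signal that the dataset needs attention before training.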

Training and Evaluation Phase – Tackling Non-Determinism

Unlike traditional code, AI models can provide different responses to the same input—language models, for instance, generate varied outputs depending on temperature settings.
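To make the effect of temperature concrete, here is a small sketch of temperature-scaled sampling. It assumes the model exposes raw scores (logits) for a handful of candidate tokens; the tokens, scores, and function names are hypothetical. It also shows one common way to make such a test repeatable: fixing the random seed.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

tokens = ["yes", "no", "maybe"]  # hypothetical candidate outputs
logits = [2.0, 1.0, 0.5]         # hypothetical model scores

cold = softmax_with_temperature(logits, 0.1)  # nearly always picks "yes"
hot = softmax_with_temperature(logits, 2.0)   # spreads probability more evenly

# Two runs with the same seed produce the same sequence of samples.
rng_a = random.Random(7)
run_a = [sample_token(tokens, logits, 1.0, rng_a) for _ in range(5)]
rng_b = random.Random(7)
run_b = [sample_token(tokens, logits, 1.0, rng_b) for _ in range(5)]
print(cold, hot, run_a == run_b)
```

Seeding only controls the sampling step in this sketch; real systems may have other sources of variation that a fixed seed does not remove.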

QA here involves evaluating models with metrics such as:

- Accuracy – deceptively universal (e.g., misleading for rare conditions like cancer, where a model that always predicts "healthy" still scores high),

- Precision, Recall, and F1-score – measure false positives/negatives effectively,

- Weighted metrics – account for class imbalance.
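The metrics listed above can be computed directly from the four confusion-matrix counts. The sketch below uses invented numbers (1,000 patients, 10 of them positive) and a deliberately useless model that always predicts "negative". Returning 0.0 when a metric is undefined is a convention chosen for this example, not a universal rule.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return tp, fp, fn, tn

def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 from the counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical screening data: 10 positive cases among 1,000 patients.
y_true = [1] * 10 + [0] * 990
always_negative = [0] * 1000  # a model that never flags anyone

metrics = classification_metrics(y_true, always_negative)
print(metrics)
```

Accuracy looks excellent here because almost every patient is negative, while recall shows that the model finds none of the positive cases.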

Overfitting is another threat: models may perform well on training data but poorly on real-world data. That’s why we use a separate validation dataset to tune hyperparameters and a held-out evaluation dataset to assess real generalizability.

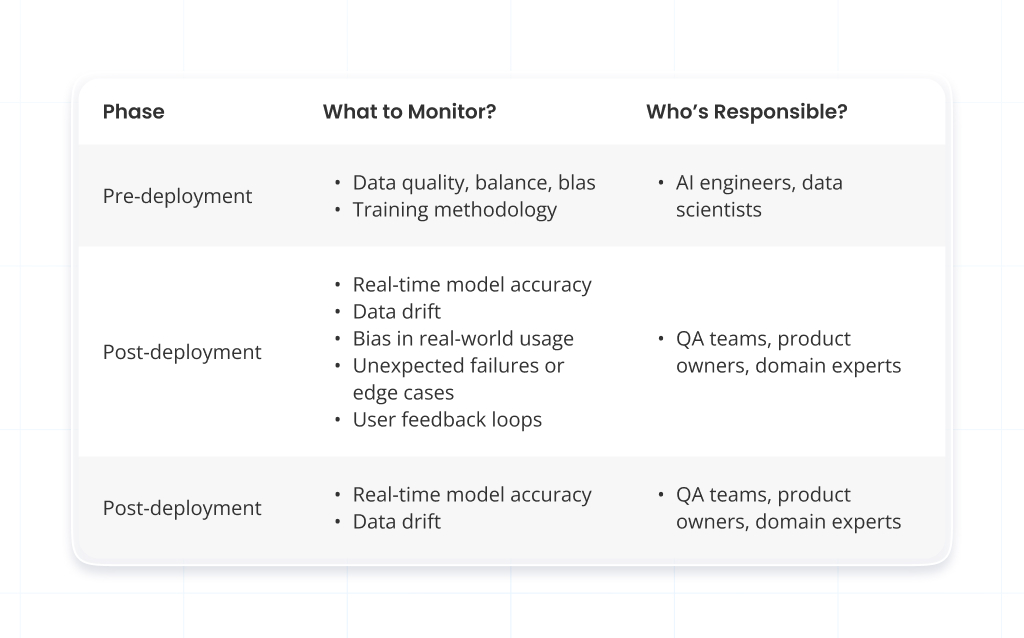

Production Phase – Monitoring After Deployment

A deployed model is not a forgotten model. AI requires continuous monitoring:

- Is the model still performing correctly?

- Has the input data distribution shifted (so-called data drift)?

- Are new problem types emerging?

QA at this stage may involve both automated production tests and periodic reviews by domain experts. Learn more about the importance of post-deployment monitoring in AI projects in this external article from the Ada Lovelace Institute.
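One way to automate the data drift check is the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample and in recent production data. This is a swapped-in technique chosen for illustration; the article does not prescribe a specific drift measure. The bin count, the smoothing constant `eps`, and the sample data below are assumptions of this sketch.

```python
import math

def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Compare two samples of one numeric feature; 0.0 means identical bins."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp outliers into edge bins
            counts[idx] += 1
        # Replace empty bins with a tiny value so the logarithm is defined.
        return [max(c / len(values), eps) for c in counts]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

# Hypothetical feature values: training-time sample vs. shifted production data.
reference = [i / 100 for i in range(1000)]
shifted = [x + 3 for x in reference]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
print(psi_same, psi_shifted)
```

A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, but the right threshold depends on the feature and the application, so it should be calibrated rather than assumed.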

When Data is Missing – Expert-Based Quality Assurance

In projects lacking large labeled datasets (e.g., expert systems using language models), traditional QA doesn’t apply. Domain experts must manually assess model outputs:

- The model generates results based on input data,

- An expert (e.g., a doctor or lawyer) reviews them, often using simple tools like Excel,

- Corrected data is sent back to AI engineers,

- New data is used to retrain the model.

This iterative learning continues until the model achieves satisfactory performance.
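A single round of this review loop could look like the sketch below. It assumes the expert's verdicts arrive as a simple mapping from item ID to the correct label (for example, exported from a spreadsheet). The function name, the data layout, and the sample labels are hypothetical.

```python
def merge_expert_review(model_outputs, expert_labels):
    """Pair model outputs with expert verdicts for one review round.

    model_outputs: dict mapping item ID to the model's predicted label
    expert_labels: dict mapping item ID to the expert's label (reviewed items only)
    Returns new training examples and the model/expert agreement rate.
    """
    reviewed = [
        (item_id, model_outputs[item_id], gold)
        for item_id, gold in expert_labels.items()
        if item_id in model_outputs
    ]
    new_examples = [
        {"id": item_id, "label": gold, "was_corrected": pred != gold}
        for item_id, pred, gold in reviewed
    ]
    agreed = sum(1 for _, pred, gold in reviewed if pred == gold)
    agreement_rate = agreed / len(reviewed) if reviewed else 0.0
    return new_examples, agreement_rate

# Hypothetical review round: the expert checked two of three outputs.
model_outputs = {"a": "benign", "b": "malignant", "c": "benign"}
expert_labels = {"a": "benign", "b": "benign"}

new_examples, agreement_rate = merge_expert_review(model_outputs, expert_labels)
print(new_examples, agreement_rate)
```

Tracking the agreement rate across rounds gives one possible stopping criterion: the loop can end once the rate stays above a level the domain experts consider satisfactory.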

Step-by-Step QA Process

Understanding the Problem and Client Needs

QA starts by thoroughly understanding the business goal and context in which the model will operate—this helps choose the right data and evaluation metrics.

Data Collection and Preparation

Data is gathered from internal or external sources and assessed for relevance and completeness.

Balancing and Cleaning the Data

Errors and anomalies are removed, and class balance is ensured to avoid unwanted biases in the model.
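When collecting more examples of a rare class is not possible, one common alternative is to weight classes inversely to their frequency during training. The sketch below uses the formula `n_samples / (n_classes * class_count)`; the function name and the 90/10 label split are invented for illustration, and this is only one of several balancing strategies.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class inversely to its frequency in the dataset."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {
        label: n_samples / (n_classes * count)
        for label, count in counts.items()
    }

# Hypothetical labels: 90 negative examples and 10 positive ones.
labels = ["neg"] * 90 + ["pos"] * 10
weights = balanced_class_weights(labels)
print(weights)
```

With these weights, each mistake on the rare class counts more heavily in the loss, which partly compensates for how seldom the model sees it.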

Splitting the Dataset: Training (70%), Validation (20%), Evaluation (10%)

Keeping the three sets strictly separate lets us assess model quality while reducing the risk of data leakage. In practice, ratios may vary depending on available data volume, task complexity, and resource constraints.
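A 70/20/10 split can be sketched as follows. The function name `split_dataset` and the fixed seed are choices made for this example. The sketch shuffles once and slices, so it does not stratify by class; heavily imbalanced data may need a stratified split instead.

```python
import random

def split_dataset(items, train=0.7, validation=0.2, seed=42):
    """Shuffle once with a fixed seed, then slice into three disjoint parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    train_set = shuffled[:n_train]
    validation_set = shuffled[n_train:n_train + n_val]
    evaluation_set = shuffled[n_train + n_val:]  # whatever remains
    return train_set, validation_set, evaluation_set

# Hypothetical dataset of 1,000 record IDs.
records = list(range(1000))
train_set, validation_set, evaluation_set = split_dataset(records)
print(len(train_set), len(validation_set), len(evaluation_set))
```

Fixing the seed makes the split reproducible, so the evaluation set stays the same between experiments and is never accidentally mixed into training.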

Model Selection and Training

The appropriate model architecture is chosen and trained on the training dataset, with parameters tuned to fit the problem.

In-Training Validation

Ongoing validation helps detect overfitting early and monitor performance in real time.
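A common way to act on in-training validation is early stopping: training halts once the validation loss has stopped improving for a set number of epochs. The sketch below works on a plain list of per-epoch validation losses. The `patience` and `min_delta` parameters and the loss values are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the epoch index at which training would stop, or None."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            # Validation loss improved: remember it and reset the counter.
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None

# Hypothetical validation losses: improving at first, then rising.
val_losses = [0.90, 0.70, 0.60, 0.58, 0.61, 0.65, 0.70, 0.80]
stop_at = early_stopping_epoch(val_losses, patience=3)
print(stop_at)
```

Rising validation loss while training loss keeps falling is the classic sign of overfitting, which is why the check uses validation data rather than training data.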

Post-Training Evaluation (on unseen data)

A final stress test on data the model has never seen indicates how it is likely to perform in real-life scenarios after deployment.

Model Deployment

The model goes live in a production environment, processing real user or system data.

Production Monitoring (Automation + Experts)

Alert systems and expert reviews help detect quality drops and unexpected behavior quickly.
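An automated alert of this kind can be as simple as tracking accuracy over a sliding window of recent predictions whose true outcomes are known. The class below is a minimal sketch; its name, the window size, and the threshold are assumptions, and a real deployment would route the alert to a monitoring system rather than return a boolean.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Track accuracy over the most recent predictions and flag drops."""

    def __init__(self, window=100, threshold=0.9):
        self.outcomes = deque(maxlen=window)  # oldest results drop off
        self.threshold = threshold

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        # Wait for a full window so a few early mistakes do not trigger alerts.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold

    def record(self, prediction, actual):
        """Store one labeled result and report whether an alert is due."""
        self.outcomes.append(prediction == actual)
        return self.should_alert()

# Hypothetical stream of (prediction, actual) pairs.
monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
stream = [(1, 1), (1, 1), (1, 1), (1, 1), (0, 1), (0, 1)]
alerts = [monitor.record(pred, actual) for pred, actual in stream]
print(alerts)
```

This approach only works when true outcomes eventually become available; where they do not, periodic expert review of sampled predictions fills the gap.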

Iterative Improvement

The model is continuously updated with new data and feedback to maintain accuracy and relevance over time.

Summary

Quality Assurance in AI Software Development is not a supporting role but the foundation of success. Regardless of how advanced a model’s architecture is, data quality and verification methods ultimately determine its effectiveness. AI engineers today often wear the QA hat themselves—but when technology alone isn’t enough, domain experts become essential. Because only through the collaboration of humans and machines can we achieve true quality in artificial intelligence.

Want to see how a real AI development team approaches QA? Visit our Quality Assurance page at Stermedia and explore how we ensure trust and performance in AI.